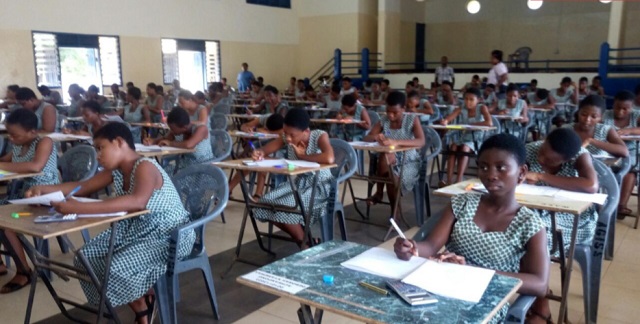

Our models can identify how much a particular variable affects students’ scores. That allows us to pinpoint the demographic characteristics most closely related to test results. For example, by looking at just one characteristic – the percentage of families in a given community living in poverty – we can explain almost 58 percent of the variation in eighth grade English language arts scores.

Our most recent study explored three years of test scores from grades six through eight in more than 300 New Jersey schools. We looked at the percentage of families in the community with income over US$200,000 a year, the percentage of people in the community living in poverty and the percentage of people in the community with bachelor’s degrees. Using those three factors, we could accurately predict the percentage of students who scored proficient or above in 75 percent of the schools we sampled.

An earlier study that focused on fifth grade test scores in New Jersey predicted the results accurately for 84 percent of schools over a three-year period.

Standardised tests

Students’ scores on mandated standardised tests have been used to evaluate U.S. educators, students and schools since President George W. Bush signed the No Child Left Behind Act (NCLB) in 2002.

Although more than 20 states had previously instituted state testing in some grade levels by the late 1990s, NCLB mandated annual standardised testing in all 50 states. It required standardised mathematics and English language arts tests in grades three through eight and once in high school. State education officials also had to administer a standardised science test in fourth grade, eighth grade and once in high school.

The Obama administration expanded standardised testing through requirements in the Race to the Top grant program and by funding the development of two national standardised tests aligned with the Common Core State Standards: those of the Smarter Balanced Assessment Consortium (SBAC) and the Partnership for Assessment of Readiness for College and Careers (PARCC).

Forty-five states initially adopted the Common Core in some form. Approximately 20 are currently part of the PARCC or SBAC consortia. Key portions of Race to the Top applications required states to use student test results to evaluate teachers and principals.

Smarter assessments

To be clear, the results of our study do not mean that money determines how much students can learn. That couldn’t be further from the truth. In fact, our results demonstrate that standardised tests don’t really measure how much students learn, or how well teachers teach, or how effective school leaders lead their schools. Such tests are blunt instruments that are highly susceptible to measuring out-of-school factors.

Though some proponents of standardised assessment claim that scores can be used to measure improvement, we’ve found that there’s simply too much noise. Changes in test scores from year to year can be attributed to normal growth over the school year, to whether a student has a bad day or feels sick or tired on test day, to computer malfunctions or to other unrelated factors.

According to the technical manuals published by the creators of standardised assessments, none of the tests currently used to judge teacher or school administrator effectiveness or student achievement has been validated for those uses. For example, none of the PARCC research, as provided by PARCC, addresses these issues directly. The tests are not designed to diagnose learning; as their technical reports make clear, they are simply monitoring devices.

The bottom line is this: Whether you’re trying to measure proficiency or growth, standardised tests are not the answer.

Though our results in several states have been compelling, we need more research on a national level to determine just how much test scores are influenced by out-of-school factors.

If standardised test results can be predicted with a high level of accuracy from community and family factors, that has major policy implications. In my opinion, it suggests we should jettison the entire policy foundation that uses such test results to make important decisions about school personnel and students. After all, these factors are outside the control of students and school personnel.

Although there are ideological disputes about the merits of standardised test results, the science has become clearer. The results suggest that standardised test results tell us more about the community in which a student lives than about how much the student has learned or how much the student has grown academically, socially and emotionally during a school year.

Although some might not want to accept it, over time, assessments made by teachers are better indicators of student achievement than standardised tests. For example, high school GPA, which is based on classroom assessments, is a better predictor of student success in the first year of college than the SAT.

Giving more weight to teachers’ own assessments would go a long way toward providing important information about effective teaching, compared with a test score that has little to do with the teacher.

****

Christopher Tienken is Associate Professor of Education Leadership, Management and Policy, Seton Hall University.

The Independent Uganda: You get the Truth we Pay the Price